Editor’s note: This essay is an entry in Fordham’s 2023 Wonkathon, which asked contributors to answer this question: “How can we harness the power but mitigate the risks of artificial intelligence in our schools?”

I am a high school teacher. The release of ChatGPT to the public in November of 2022 was a flashbulb moment for me. Not only was it clear that generative artificial intelligence (GenAI) is a consequential and transformative technology, it was in my students’ hands, immediately available, pervasive, rapidly changing, and much more powerful than the narrow AI tools that preceded it.

It has been almost a year since that flashbulb moment. Within that time frame, ChatGPT has been upgraded and equipped with an ever-expanding suite of plugins, internet browsing capability, and integrated image generation. It has been packaged into an app that can speak and give haptic feedback. The capabilities and rate of change of GenAI are staggering, as is the sheer number of large language models (LLMs) and associated AI tools.

Further complicating matters, the approaches taken by GenAI companies and the policies they have created are inconsistent. For example, Anthropic’s approach towards democratizing AI has been through gradual implementation of collective constitutional AI, whereas Meta’s approach was the abrupt release of the source code for some of its LLMs. In terms of age restrictions, my tenth-grade students may sign up for a ChatGPT account with parental permission, but not a Google Bard account. Mirroring this lack of consistency within the tech community, policies and guidelines among and within schools can vary widely.

Why? Generative AI is much bigger than a “technology thing”; it is not just some new website or software package. It is a sea change. It affects all stakeholders.

A sea change (with murky waters)

AI can open up new avenues for individualized instruction through several paradigms: AI-directed (learner as recipient), AI-supported (learner as collaborator), or AI-empowered (learner as leader). Intelligent tutoring systems (ITS) as knowledgeable, personalized, 24/7 learning allies with seemingly infinite patience make addressing Benjamin Bloom’s 2 Sigma Problem seem not only possible, but an educational milestone that’s shockingly close in my own classroom. But GenAI goes even further, as abilities in planning, data analysis, communication, and workflow can be augmented. If prompted effectively, AI can assist in these tasks (and more) across a range of collaborative roles—teacher’s aide, student helper, administrative assistant, assistant coach, parent liaison—impacting every stakeholder in an educational community.

But the pitfalls associated with GenAI are extremely problematic. Deskilling, academic dishonesty, privacy concerns, bias, and misinformation can cause tangible harm to an educational community and to the individuals that comprise it. For example, in my science classroom, asking AI to generate an image of an “intellectual scientist” can yield significantly biased results:

Images created by DALL·E 2, via Stable Diffusion Bias Explorer.

There is value in such teachable moments with students, an opportunity to explore “isms” and adversity in the field of science and beyond. However, students encountering such overtly biased outputs without adequate context or guidance is cause for worry.

Human-centric solutions

Generative AI is a sea change rife with significant challenges. It will profoundly impact education at every level. It will affect those that support the mission of education and those that deliver it. Therefore, responding to it effectively and ethically is the responsibility of every stakeholder, namely, policymakers and organizations, school communities, and individuals like teachers, students, and parents. Engaging all stakeholders in the dialogue and decision-making process is essential if we wish to harness the power of GenAI responsibly while mitigating its risks. What follows is a series of suggestions for how different stakeholders can address this sea change head on.

Addressing GenAI at the classroom/individual level:

- Norm with students: It is critical to explore, discuss, and co-create expectations around use of GenAI. This empowers students and can yield an inclusive set of guidelines.

- Pursue professional development (PD) opportunities: In-house PD, webinars, conferences, and courses can equip teachers with more tools to address the impacts of AI in education.

- Promote AI literacy: Resources, such as the recently released AI literacy videos from Code.org, should be widely utilized.

- Share findings/resources: GenAI technologies have the potential to address equity concerns and democratize aspects of education. Consider sharing AI-related knowledge and resources with peers. Lilach and Ethan Mollick’s prompt libraries for education and AI videos are good examples.

- Engage with others around this topic: Discuss, give guidance, share feedback, and support/organize community engagement initiatives around AI in education.

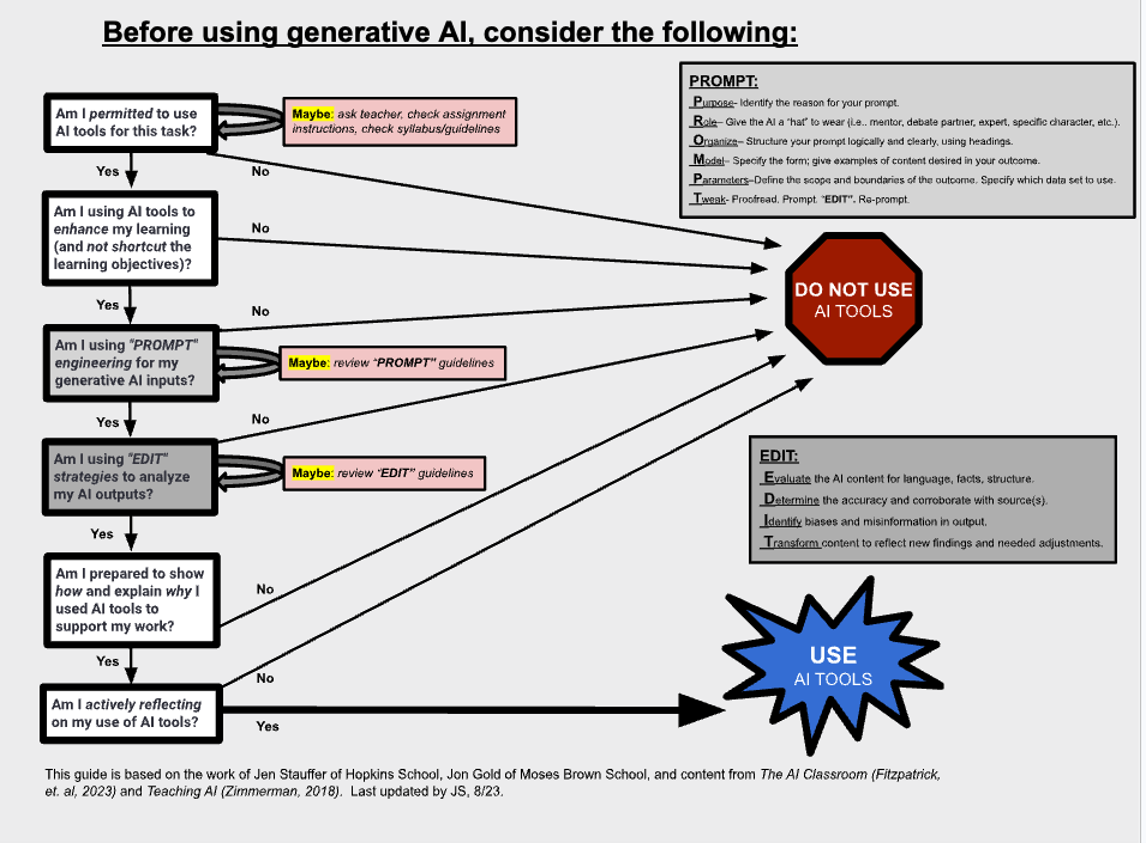

- Instill mindfulness and good habits around AI: Structured learning opportunities about and with AI technology are needed so as to learn how to leverage it ethically and effectively. All AI users should reflect frequently on decision making and AI use strategies. An AI decision tree, such as the one I created for my students, can be helpful in this regard:

Addressing GenAI at the school/district level:

- Draft an AI strategic plan: This plan should act as a roadmap for addressing AI literacy, evaluation of EdTech partnerships, adapting to AI-driven teaching and learning changes, futureproofing, and sustainability.

- Form AI focus groups: Through such groups, faculty, staff, parents, and students can provide valuable insights to administrators as they consider aspects of an AI strategic plan and formulate school policy.

- Curate resources for stakeholders: Determine which AI models and tools are optimal, offering a clear rationale. Provide context in the form of background information related to their use.

- Formulate policies around transparent AI usage and AI privacy: These are necessary to ensure disclosure and clear lines of accountability. Awareness, consent, data collection limitations, and data anonymization are necessary considerations in such policies.

- Perform frequent AI impact assessments: Take stock regularly, auditing for bias and adherence to policies by community members and vendors, and adjust the strategic plan and AI policies accordingly.

- Participate in or create opportunities for community engagement: Initiatives such as MIT’s Responsible AI for Social Empowerment and Education (RAISE) are crucial in bridging the gap between technological advancements and educational inclusivity.

Addressing GenAI at the policymaker/organization level:

- Aim for human-centered AI: Be guided by all stakeholders, not exclusively by technology companies. A human-centric approach is outlined in Artificial Intelligence and the Future of Teaching and Learning by the U.S. Department of Education’s Office of Educational Technology and in UNESCO’s Guidance for Generative AI in Education and Research. Educators, families, students, and communities should help guide adoption of AI tools, as their inclusion and contextual expertise are of critical importance. Inclusive development and implementation can help ensure AI tools reflect educational values.

- Require proof of efficacy and fairness from vendors: Accountability focused on vendors, tools, and district implementation may encourage development of more effective, unbiased GenAI. Privacy violations and algorithmic bias can be addressed through strong data policies, consent processes, transparency requirements, and bias evaluations. Request (or require) AI vendors to adhere to a code of conduct. Consider reviewing AI vendors and tools, certifying those that conform to certain standards.

- Be guided by AI research: Stay abreast of ongoing research by groups such as the Human-Centered Artificial Intelligence Institute at Stanford University. Re-evaluation will be essential as AI technologies evolve. With thoughtful policies and practices, AI’s augmentation potential can be realized while centering the humans AI aims to serve.