We know from international data—PISA, TIMSS, and so on—that other countries produce more “high achievers” than we do (at least in relation to the size of their pupil populations). And it’s no secret that in the U.S., academic achievement tends to correlate with socioeconomic status, hence producing far too few high achievers within the low-income population. But is this a uniquely American problem? How do we compare to other countries?

To begin to answer these questions, Chester Finn and I looked more closely at the PISA 2012 results (in conjunction with a study we’re conducting on how other advanced countries educate their high-ability students). The OECD has a socioeconomic indicator it uses in connection with PISA results called the Index of Economic, Social, and Cultural Status (ESCS). Like most SES gauges that depend heavily on student self-reporting, it’s far from perfect, but to the best of our knowledge it’s no worse than most. In any case, it’s one of the few socioeconomic indicators that allow for cross-national education comparisons. It is derived from parents’ occupational status, educational level, and home possessions,[1] and it can be split into quartiles for a given country, wherein the bottom quarter has the lowest SES and the top quarter has the highest.
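To make the quartile split concrete, here is a minimal Python sketch. It assumes a list of student records with a hypothetical `escs` field and ignores the sampling weights that real PISA analyses apply, so treat it as an illustration rather than the OECD's procedure.

```python
import statistics

# Invented ESCS values for eight students in one country (not real PISA data).
students = [{"id": i, "escs": v} for i, v in enumerate(
    [-1.5, -1.0, -0.5, 0.0, 0.3, 0.6, 1.0, 1.4])]


def bottom_quartile(records):
    """Return the records whose ESCS is at or below the first quartile cut point."""
    # statistics.quantiles with n=4 returns the three quartile cut points.
    cut = statistics.quantiles([r["escs"] for r in records], n=4)[0]
    return [r for r in records if r["escs"] <= cut]


low_ses = bottom_quartile(students)
print([r["escs"] for r in low_ses])
```

Because the cut point is computed separately for each country, the "bottom quartile" always means the lowest-SES quarter of that country's own test takers.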

PISA results are reported, inter alia, according to seven proficiency levels, ranging from zero to six. Levels 5 and 6 are the highest. To give a feel for this demarcation, approximately 9 percent of U.S. test takers scored at level 5 or above (roughly 16 percent did the same in Canada, 15 percent in Finland, and 40 percent in Singapore).

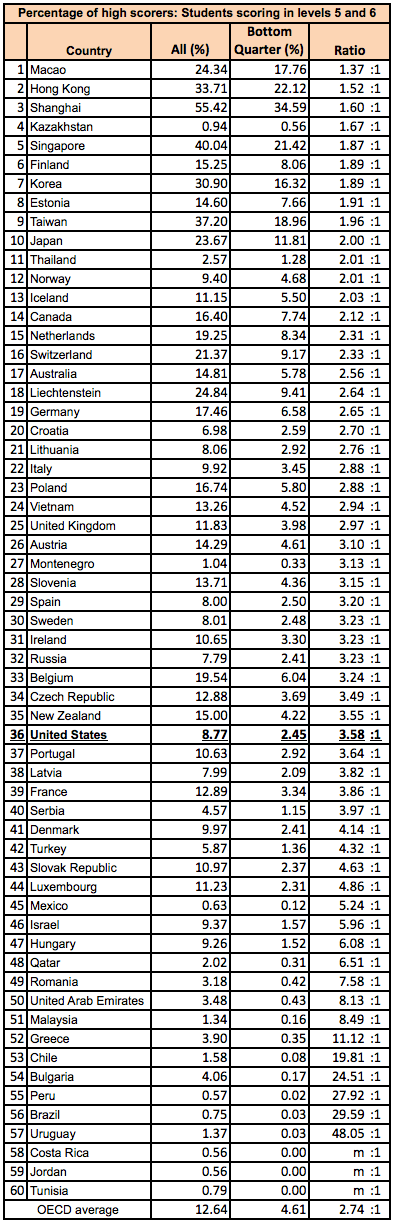

Then we looked at high achievers within the lowest ESCS quartile. The table below puts these pieces together. The first data column shows the percentage of all PISA test takers in a given country who scored at level 5 or 6. The next shows the percentage of kids in the bottom quartile who scored at these levels.[2] The last column is a ratio of those two percentages. (For example, the U.S. had approximately 8.77 percent of all test takers score at level 5 or 6, but just 2.45 percent of those in the lowest SES quartile did, so the U.S. has a 3.58:1 ratio.)[3]
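The arithmetic behind the ratio column is simple enough to sketch in a few lines of Python. The function name is ours, and the "m" return value simply mirrors the table's convention for countries with no low-SES top scorers.

```python
def top_scorer_ratio(pct_all, pct_low_ses):
    """Ratio of the overall share of level 5-6 scorers to the bottom-quartile share.

    Returns "m" when no low-SES test takers reached level 5 or 6,
    following the table's convention.
    """
    if pct_low_ses == 0:
        return "m"
    return round(pct_all / pct_low_ses, 2)


# U.S. figures quoted in the text: 8.77 percent overall, 2.45 percent in the lowest quartile.
us_ratio = top_scorer_ratio(8.77, 2.45)
print(f"{us_ratio}:1")  # prints 3.58:1
```

A ratio of 1:1 would mean that low-SES students reach the top levels at the same rate as test takers overall; the higher the ratio, the more underrepresented they are.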

It turns out that the U.S. is really bad at producing high-achieving students from low-SES circumstances. The average U.S. student is roughly three and a half times more likely to be a top scorer than is a low-SES student.

The U.S. also does a comparatively lousy job on this measure, especially when compared to those countries that we might view as competitors. In fact, this degree of failure is a common theme: we also ranked thirty-fifth for mean math scores, thirty-fifth for top math scorers (90th percentile scores in a given country), and thirty-second for high-SES students.

So why does America produce so few high-achieving students from low-income backgrounds? PISA’s SES index is most useful as an intra-country measure. (Comparing degrees of poverty in different countries is complicated, “fuzzy” business.) Therefore, the data don’t really speak to the magnitude of our students’ disadvantage or of our income inequality, compared to other countries. But we can surmise that both factors contribute to this failure. We might also simply be unusually bad at helping low-SES high achievers.

Whatever the answer is, a couple of facts remain: The U.S. is bad at maximizing the potential of high-achieving, low-income students. And that shouldn’t surprise anyone.

Editor's Note: This post was updated on August 19, 2015, to correct data errors.

[1] Here is a more detailed explanation of ESCS:

The PISA index of economic, social and cultural status (ESCS) was derived from the following three indices: highest occupational status of parents (HISEI), highest educational level of parents in years of education according to ISCED (PARED), and home possessions (HOMEPOS). The index of home possessions (HOMEPOS) comprises all items on the indices of WEALTH, CULTPOSS and HEDRES, as well as books in the home recoded into a four-level categorical variable (0–10 books, 11–25 or 26–100 books, 101–200 or 201–500 books, more than 500 books).

The PISA index of economic, social and cultural status (ESCS) was derived from a principal component analysis of standardised variables (each variable has an OECD mean of zero and a standard deviation of one), taking the factor scores for the first principal component as measures of the PISA index of economic, social and cultural status.

Principal component analysis was also performed for each participating country or economy to determine to what extent the components of the index operate in similar ways across countries or economies. The analysis revealed that patterns of factor loading were very similar across countries, with all three components contributing to a similar extent to the index (for details on reliability and factor loadings, see the PISA 2012 Technical Report (OECD, forthcoming)).

The imputation of components for students with missing data on one component was done on the basis of a regression on the other two variables, with an additional random error component. The final values on the PISA index of economic, social and cultural status (ESCS) for 2012 have an OECD mean of 0 and a standard deviation of one.

ESCS was computed for all students in the five cycles, and ESCS indices for trends analyses were obtained by applying the parameters used to derive standardised values in 2012 to the ESCS components for previous cycles. These values will therefore not be directly comparable to ESCS values in the databases for previous cycles, though the differences are not large for the 2006 and 2009 cycles. ESCS values in earlier cycles were computed using different algorithms, so for 2000 and 2003 the differences are larger.
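For readers who want to see the mechanics, the following is a rough Python sketch of the first-principal-component step described in this note. It is not the OECD's code: the sample values are invented, the variables are standardized within the toy sample rather than against OECD-wide means, and sampling weights and imputation are left out. The function names are ours.

```python
import math
import statistics


def standardize(values):
    """Rescale numbers to mean 0 and (population) standard deviation 1."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return [(v - mean) / sd for v in values]


def first_component_loadings(columns, iterations=200):
    """Leading eigenvector of the correlation matrix, found by power iteration.

    `columns` holds equally long, already standardized variables.
    Assumes the variables are positively correlated, as SES components typically are.
    """
    n, k = len(columns[0]), len(columns)
    corr = [[sum(a * b for a, b in zip(columns[i], columns[j])) / n
             for j in range(k)] for i in range(k)]
    vec = [1.0 / math.sqrt(k)] * k
    for _ in range(iterations):
        nxt = [sum(corr[i][j] * vec[j] for j in range(k)) for i in range(k)]
        norm = math.sqrt(sum(x * x for x in nxt))
        vec = [x / norm for x in nxt]
    return vec


def escs_like_index(hisei, pared, homepos):
    """Combine three components into one index with mean 0 and SD 1."""
    cols = [standardize(hisei), standardize(pared), standardize(homepos)]
    loadings = first_component_loadings(cols)
    scores = [sum(w * col[i] for w, col in zip(loadings, cols))
              for i in range(len(hisei))]
    return loadings, standardize(scores)


# Invented values for eight students (not real PISA data).
hisei = [25, 30, 45, 50, 65, 70, 80, 35]                   # parents' occupational status
pared = [9, 10, 12, 13, 16, 16, 18, 12]                    # parents' years of education
homepos = [-1.2, -0.8, -0.1, 0.2, 0.9, 1.1, 1.5, -0.5]     # home possessions index

loadings, index = escs_like_index(hisei, pared, homepos)
print([round(w, 2) for w in loadings])
print([round(v, 2) for v in index])
```

In this toy sample all three loadings come out positive and of similar size, which is the pattern the note describes: each component contributes to the index to a similar extent.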

[2] OECD analyst Pablo Zoido compiled the quartile score data.

[3] An “m” in the ratio column means that a country had zero low-SES test takers score at level 5 or 6.