In December, a workgroup established by the State Board of Education released a number of policy recommendations related to dropout-recovery charter schools. Representing a modest but not insignificant portion of Ohio’s charter sector, these schools enroll about 15,000 students, most of whom have previously dropped out or are at risk of doing so. The policies governing dropout-recovery schools have been much debated over the years—particularly their softer alternative report card—and this report follows on the heels of a 2017 review of state policy.

In general, the workgroup’s report offers recommendations that would relax accountability for dropout-recovery schools, most notably in the area of report cards. Though disappointing, that’s not surprising; save for two seats held by state board members, the workgroup consisted entirely of representatives from dropout-recovery schools. Regrettably, they seem to have used their overwhelming influence to push ideas that would make their own lives a bit more comfortable but would not necessarily support students’ academic needs.

In a pointed response to the workgroup report, state superintendent Paolo DeMaria rightly expressed concern. He wrote:

> As might be expected of a process that was somewhat one-sided, the recommendations contained in the Work Group report favor loosening regulations of schools operating dropout prevention and recovery programs.... Consequently, the Work Group process reflects members of a regulated educational sector given carte blanche to design their own regulations, resulting in a decidedly one-sided report.

Although the workgroup claims that “high standards must be maintained for at-risk students,” its proposals would significantly lower the standards for dropout-recovery schools. Specifically, it suggests that the state:

- Exclude certain students who are behind in credit accumulation from the test-passage and graduation measures.

- Reduce the weight on the test-passage component in the overall ratings.

- Replace the student growth measure[1] with one that instead considers course credits.

- Create a “life readiness” component that awards credit when students complete just one among a menu of undefined activities, such as service learning, career-based intervention, or participating in career-technical education.

- Create a “culture” component that looks at various “inputs” such as whether schools engage in a community-business partnership, offer professional development, administer parent/student surveys, and more.

These recommendations are unsettling for several reasons.

First, excluding certain students from accountability measures undermines the basic principle that all students can learn, and it contradicts a central tenet of accountability: that schools should be incentivized to pay attention to, rather than ignore, students struggling to achieve state goals. Ohio already relaxes report card standards on the test-passage and graduation components, and excluding students would further erode accountability for their academic outcomes.

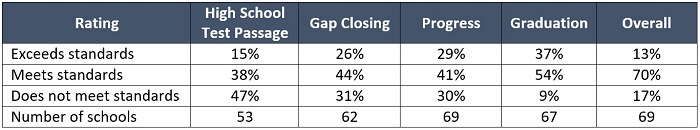

Second, the proposal to reduce the weight on the achievement-based component could be seen as an effort to inflate overall ratings. Of the four current components on the dropout-recovery report card, the High School Test Passage component is the most stringent: 15 percent of schools are rated “exceeds standards”; 38 percent receive “meets standards”; and 47 percent get “does not meet standards.” As the table below shows, the ratings on the other components are generally higher. Consequently, shifting weight away from test passage would raise the overall ratings of a number of schools even though their performance hasn’t actually improved.

Table 1: Distribution of overall and component ratings for dropout-recovery schools, 2018–19

Source: Ohio Department of Education. Note: The weights used to calculate the Overall rating are as follows: high school test passage, 20 percent; gap closing, 20 percent; progress, 30 percent; and graduation, 30 percent. For more information about the dropout-recovery report card, see here.
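The reweighting concern can be illustrated with some simple arithmetic. The sketch below uses the four weights listed in the note above; everything else is an assumption made for illustration. In particular, the point values assigned to each rating, the 10 percent alternative weight, and the example school are hypothetical and do not come from the workgroup report or from the state's actual scoring rules.

```python
# Weights from the note to Table 1 (the only figures taken from the source).
CURRENT_WEIGHTS = {
    "test_passage": 0.20,
    "gap_closing": 0.20,
    "progress": 0.30,
    "graduation": 0.30,
}

# ASSUMPTION: hypothetical point values per rating, chosen only for illustration.
POINTS = {"exceeds": 2, "meets": 1, "does_not_meet": 0}


def reweight(weights, component, new_weight):
    """Set one component's weight and rescale the rest so the total stays 1."""
    others_total = sum(w for name, w in weights.items() if name != component)
    scale = (1.0 - new_weight) / others_total
    return {
        name: (new_weight if name == component else w * scale)
        for name, w in weights.items()
    }


def composite(ratings, weights):
    """Weighted average of a school's component ratings."""
    return sum(weights[name] * POINTS[ratings[name]] for name in weights)


# ASSUMPTION: a hypothetical school that misses the test-passage standard
# but meets standards on the other three components.
school = {
    "test_passage": "does_not_meet",
    "gap_closing": "meets",
    "progress": "meets",
    "graduation": "meets",
}

# ASSUMPTION: a hypothetical proposal that halves the test-passage weight.
lighter = reweight(CURRENT_WEIGHTS, "test_passage", 0.10)

before = composite(school, CURRENT_WEIGHTS)
after = composite(school, lighter)
print(f"composite under current weights: {before:.2f}")
print(f"composite with lighter test-passage weight: {after:.2f}")
```

Under these assumed values, the hypothetical school's composite rises from 0.80 to 0.90 without any change in its component ratings, which is the pattern the paragraph above warns about.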

Third, the replacement of an objective, assessment-based progress component with a course-credit-driven measure would make school quality harder to evaluate. The growth rating is especially critical when schools serve students who are behind, and its removal would leave communities and authorizers less able to gauge academic performance. In contrast, relying on credits is unlikely to yield credible information about student learning. Unless the state imposes very strict rules around awarding course credit, the measure could be easily gamed by low-quality providers using dubious forms of credit recovery.

Fourth, akin to course credits, the two new dimensions—the life-readiness and culture components—would also be open to gaming and abuse. Without clear definitions—and the workgroup doesn’t suggest any—activities such as “service learning” or a “community-business partnership” could mean almost anything. These components also shift the focus from key measures of achievement and growth, potentially masking student outcomes in favor of check-box activities.

***

In a sense, the dropout-recovery workgroup engaged in what one might term “regulatory capture,” whereby the ones being regulated attempt to write the rules. But policymakers also need to realize that the potential for mischief is not unique to dropout-recovery schools. In the broader debate over report cards, for instance, school associations and teachers unions have promoted policies that would weaken the accountability systems applying to the schools in which they work. Among these policies are the elimination of clear ratings, the use of opaque data dashboards, a reliance on input-based measures that could be manipulated to boost ratings, and the use of check-box indicators of participation in certain programs or activities.

School employee groups should certainly have their say in the policymaking process, as they are uniquely positioned to see problems and offer solutions. But we must be mindful that they, like those in the dropout-recovery workgroup, can use their privileged position in policy discussions to further interests that don’t necessarily benefit the broader public. Whether making policy for a broad swath of Ohio schools or a small segment of them, policymakers need to listen to a wide range of views.

[1] For the dropout-recovery report card, Ohio uses an alternative assessment of student growth based on the NWEA MAP test.