NOTE: The Thomas B. Fordham Institute occasionally publishes guest commentaries on its blogs. The views expressed by guest authors do not necessarily reflect those of Fordham.

“Gap closing” is one of Ohio’s six major school report card components. The ostensible purpose of the component is to provide the public with information on which schools are making progress in closing achievement gaps among Ohio’s most disadvantaged students. Gap closing, however, has long been criticized on multiple fronts: it does not actually measure the closing of achievement gaps; it is highly correlated with socioeconomic status; and it biases Ohio’s accountability system toward rewarding achievement rather than growth. To these woes we can add another set of concerns, those centered on gap closing’s newest sub-component: English language progress (ELP).

Traditionally, Ohio’s gap closing measure has assessed schools on how various subgroups—including those who are African American, Hispanic, or economically disadvantaged, or have special needs—perform in three areas: English language arts, math, and graduation rates. With the addition of ELP to gap closing in 2017–18, Ohio schools with English learner (EL) populations are now additionally evaluated based on those students’ performance on the Ohio English Language Proficiency Exam, an alternative assessment given to ELs.

The inclusion of ELs in the report card is required under federal law and shines a much-needed spotlight on the academic needs of one of the state’s fastest growing student populations. But while well-intentioned, the new ELP measure is imperfectly implemented. This post highlights three chief shortcomings.

First, the ELP measure relies on raw proficiency thresholds. Proficiency thresholds only give schools credit for students who achieve proficiency in a content area; no credit is assigned for moving students closer to proficiency. Second, the ELP measure effectively imposes all-or-nothing point thresholds on schools. Of the 780 schools that have been assessed on the measure since 2018, some 73 percent received the full 100 points, while another 25 percent received 0 points. Only 2 percent of assessed schools received partial points for the ELP sub-component. Finally, the contribution of ELP to the overall gap closing score and report card grades varies dramatically across schools, and for some schools weighs very heavily.

Taken together, these features add up to a system in which the performance of very small numbers of students can have disproportionately large effects, not only on a school’s ELP sub-component grade, but also on the entire report card grade. Importantly, the inclusion of ELP may benefit some schools, “inflating” their final report card score, while penalizing others. In this post, however, I focus on ELP’s potentially negative consequences because the point penalties imposed on schools are, on average, about three times as large as any potential bonuses.

Let’s take a closer look at how the various pieces of ELP fit together.

The use of raw proficiency thresholds and the largely all-or-nothing allocation of points are related, and they contribute to small numbers of students having potentially outsized effects on the ELP grade. The use of proficiency thresholds (rather than a proficiency index) means that ELP-assessed schools receive no credit for students who approach but fail to meet the proficiency standard; they only receive credit when students “pass” the state’s EL assessment.[1] The ELP measure additionally tends toward an all-or-nothing points structure because ELP assigns points based on the performance of a single subgroup: English learners. Unlike the other gap closing measures, sub-par performance by EL students cannot be counter-balanced by the performance of another group.[2] For all these reasons, then, the performance of a very small number of ELs can mean the difference between a score of 0 and a score of 100 on ELP.
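To make the cliff concrete, here is a minimal Python sketch of an all-or-nothing threshold rule. It is an illustration, not the state's actual calculation: the function names, the 20-student school, and the proportional comparison are hypothetical, the 51 percent cutoff is borrowed from the 2019 threshold cited later in this post, and the partial credit for improvement mentioned in footnote 1 is ignored.

```python
def threshold_score(n_proficient: int, n_tested: int, threshold: float = 0.51) -> float:
    """All-or-nothing rule: full points if the proficient share meets the
    threshold, zero otherwise. The 0.51 default is the 2019 ELP threshold
    cited in this post; the improvement credit in footnote 1 is ignored."""
    return 100.0 if n_proficient / n_tested >= threshold else 0.0


def proportional_score(n_proficient: int, n_tested: int) -> float:
    """Hypothetical comparison only (not an Ohio measure): points equal
    the percentage of tested students who are proficient."""
    return 100.0 * n_proficient / n_tested


if __name__ == "__main__":
    # Hypothetical school with 20 tested EL students.
    for n_proficient in (11, 10):
        print(
            n_proficient,
            threshold_score(n_proficient, 20),
            proportional_score(n_proficient, 20),
        )
```

In this hypothetical school, moving from 11 proficient students to 10 drops the threshold score from 100 to 0, while the proportional comparison moves only from 55 to 50.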

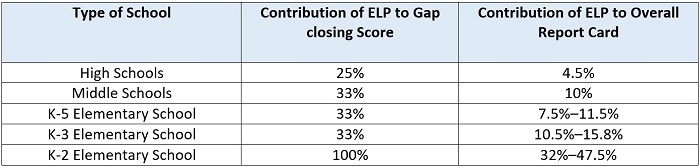

Of course, any accountability system necessarily imposes some types of thresholds. The system described above might be less troubling if the ELP measure played only a modest role in report card scores. But now we come to a third feature of ELP: its relatively heavy weighting in the overall gap closing score and, as a result, in the overall report card grade. As highlighted in Table 1, for most schools being held accountable for ELP gap closing, the performance of English learners constitutes one-quarter to one-third of the entire gap closing grade, but in rare circumstances it can comprise as much as 100 percent. This difference is due to the fact that not all schools are assessed on all possible components of gap closing.[3] Furthermore, because the Ohio report card system weights the overall gap closing component differently depending on a school’s population and grade configuration, gap closing itself can account for anywhere between 4.5 and 47.5 percent of a school’s overall report card grade. Somewhat oddly, there is currently no correlation between the weighting of ELP in a school’s overall report card grade and the percentage of EL students in the school’s population.

Table 1: Contribution of ELP to Gap Closing Score and to Overall Report Card Score

Why does this matter? The use of proficiency thresholds and the preponderance of all-or-nothing point allocations, when combined with the relatively heavy weighting of the ELP sub-component, creates an accountability system which permits dramatic shifts in gap closing scores and even overall report card scores based on the performance of a very small number of students.

For starters, Ohio’s system mechanically permits a school to be penalized by up to four letter grades on the entire gap closing measure based on the failure of a single EL student to make adequate progress. This, for example, was the experience of New Albany Middle School in New Albany-Plain Local School District in 2019. Because the school missed that year’s ELP proficiency threshold of 51 percent by one student, it received 0 points for the ELP gap closing sub-component. Its overall gap closing grade, which would have been a 100 (an A) without ELP, was reduced to a 66.7, a D. The story did not end there, though. The school’s D grade on gap closing, in turn, affected its overall report card grade, which was reduced by nearly 0.98 points, lowering the letter grade from an A to a B.
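The arithmetic behind the drop from 100 to 66.7 can be sketched in a few lines of Python. This is one reading consistent with the figures above, not the state's published formula: it assumes the gap closing score is a simple, equally weighted average of the sub-components a school is assessed on, and that the school earned 100 on its two other sub-components (labeled here, hypothetically, as ELA and math).

```python
from typing import Mapping


def gap_closing_score(subscores: Mapping[str, float]) -> float:
    """Assumed rule: equally weighted average of the assessed sub-components."""
    return sum(subscores.values()) / len(subscores)


def elp_share(subscores: Mapping[str, float]) -> float:
    """Share of the gap closing score carried by ELP under equal weighting."""
    return 1.0 / len(subscores)


if __name__ == "__main__":
    # Hypothetical sub-component scores matching the example in the text.
    without_elp = {"ela": 100.0, "math": 100.0}
    with_elp = {**without_elp, "elp": 0.0}

    print(round(gap_closing_score(without_elp), 1))  # 100.0
    print(round(gap_closing_score(with_elp), 1))     # 66.7
    print(round(elp_share(with_elp), 3))             # 0.333
```

Under the same assumption, a school assessed on four sub-components would have one-quarter of its gap closing score riding on ELP, and a school assessed on ELP alone would have all of it.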

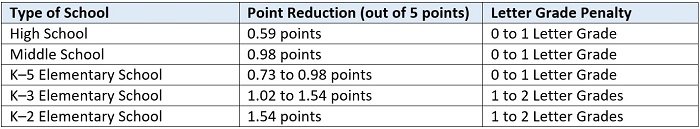

Curious about the broader picture—in particular, how much a single student’s ELP performance could affect the overall report card grade of two otherwise identical schools—I created a dataset containing all possible combinations of report card scores for the achievement, progress, K–3 literacy, graduation rates, and Prepared for Success components. I then simulated the final report card grade for two schools with identical profiles except for their ELP gap closing measure: One school passes the threshold and receives 100 points for the sub-component, while the other fails by one student and receives 0 points. Results are summarized in Table 2.

Table 2: How Much Can One Student’s Performance in ELP Gap Closing Affect a School’s Overall Report Card Grade?

The simulation suggests that, based on the performance of a single EL student, a school can earn an overall report card grade that is as much as two letter grades lower than that of an otherwise identical school. Consistent with the findings of Table 1, Ohio’s small number of early-learning elementary schools are the hardest hit because gap closing makes up nearly half of their overall report card grade. Middle and K–5 elementary schools, which are graded on three to four report card components, can easily see their report card grade fall by an entire letter grade. High schools are the least vulnerable, but depending on how close they are to the grade threshold, they can also see their grades reduced by a full letter grade.

****

Ohio’s 55,000 K–12 EL students deserve access to high-quality English language programs. They deserve the opportunity to achieve English proficiency and to fully participate in their schools’ broader academic programming. At the same time, however, Ohio communities deserve an accountability system which provides meaningful differentiation of school performance. Because of ELP’s flaws, Ohio’s current report card system fails this standard.

Ohio policymakers should return to the drawing board and construct an ELP measure that encourages districts to attend to the language acquisition needs of the state’s ELs and provides a fair assessment of their progress in moving students toward language proficiency.

[1] Schools can be granted partial credit for improvement, but thus far only a very small percentage of schools have qualified. A fuller treatment of this point, and of other issues discussed in this post, is available here.

[2] Technically, this criticism applies to all subgroup-level gap-closing measures, but the flaw is amplified in the ELP measure because the subgroup measure (EL performance on OELPA) is the only “input” into the sub-component (ELP gap closing). In contrast, in ELA and math gap-closing, the median number of subgroups assessed is four (and can be as high as ten). The final sub-component score for ELA and math takes the average score of all sub-groups.

[3] Elementary schools, for example, cannot be held accountable for graduation rates. K–2 schools are not subject to state testing in ELA and math, and hence are not held accountable for most achievement and progress indicators.