This week the Columbus Dispatch ran an article about recently released performance ratings of some 7,500 teachers in central Ohio, including those in Columbus City Schools and South-Western City Schools. Note that while the ratings – which identify teachers’ impact on student learning last year (in grades 3 through 8) – are “part of a larger movement to revamp the way Ohio judges teacher quality,” they are not part of the state budget’s mandate that districts adopt a comprehensive teacher evaluation system by July 2013. (When those evaluation systems go into effect, they must sort teachers into four tiers of effectiveness: accomplished, proficient, developing, and ineffective. Student growth will count for only half of the rating, with multiple other measures making up the rest.)

It’s unclear from this article exactly how the ratings were determined (how much more student growth distinguishes a “most effective” teacher from an “above average” one?), but one thing is clear: far more teachers are delivering adequate or excellent student gains than not. And despite all the alarm among educators and unions about using student growth to evaluate teachers’ performance, the breakdown of ratings, in the aggregate, seems pretty intuitive. The ratings are probably also more accurate than what we have now, since they spread teachers across a range of performance levels rather than lumping them into the current binary system, under which 98 percent of teachers are rated “satisfactory.”

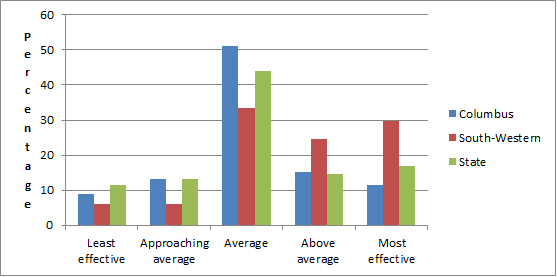

In Columbus City Schools, for example, 27 percent of teachers (in reading and math for grades 3-8, considering only student value-added data and no other measures) were above average, while 22 percent were below. A full 51 percent were “average,” meaning they delivered about a year’s worth of gains. Only 9 percent were “least effective.” That means that in a school of 22 teachers, only about two would be on the bottom-most rung. For those of us who have worked in schools before, especially urban public schools, that number feels accurate. Most schools surely have a couple of minimally effective teachers, while many more are good or even great.

The percentages for Columbus and South-Western are broken down below in a graph, which also includes the state average.

Percent of teachers by rating (2010-11)

This mirrors what one might expect of job performance in any sector, not just education. It also aligns with what Mike Miles, superintendent of Harrison School District 2 in Colorado, shared last month with central Ohio superintendents, northern Ohio school district leaders, and others when he outlined his district’s reforms to evaluation and compensation. The goal, of course, is to move more teachers along the quality spectrum toward maximum effectiveness. But in reality, most will congregate somewhere in the middle. (South-Western is the exception in this graph, with a much larger share of its teachers rated above average than the state as a whole.)

As the article points out, this represents only one year of data, and for a small subset of teachers (those in grades 3-8). It goes on to quote Columbus Education Association president Rhonda Johnson, who says that these ratings are “only a fraction” and don’t “tell the whole picture.” This is true, which is why the other 50 percent of teacher evaluations matters so much. The state budget wrote into law a requirement that districts base half of each teacher’s evaluation on student growth, but districts may choose their own indicators for the remaining 50 percent. These might include commitment to one’s school community, “professionalism” (which could account for timeliness and interactions with parents), and, of course, classroom observations.

Despite the clarion call that student test scores shouldn’t be the sole determiner of a teacher’s rating, I’ve yet to hear anyone propose making them so as a legitimate policy option. Of course there should be multiple measures, and they should be fair, transparent, robust, consistent, and accurate. But while teachers and unions would be hard-pressed to admit it, value-added results like the ones released this week already depict how teachers are distributed along the quality spectrum more accurately than current evaluation systems do.