Titles and descriptions matter in school rating systems. One remembers with chagrin Ohio’s former “Continuous Improvement” rating that schools could receive even though their performance fell relative to the prior year. Mercifully, the state retired that rating (along with other descriptive labels) and has since moved to a more intuitive A–F system. Despite the shift to clearer ratings, the name of one of Ohio’s report card components remains a head-scratcher. As a study committee reviews report cards, it should reconsider the name of the “Gap Closing” component.

First appearing in 2012–13, Gap Closing looks at performance across ten subgroups specified in both federal and state law: all students, students with disabilities, English language learners, economically disadvantaged students, and six racial and ethnic groups. The calculations are based on subgroups’ performance index and value-added scores, along with graduation rates. These subgroup data serve the important purpose of shining a light on students who may be more likely to slip through the cracks.

However, the Gap Closing title suffers from two major problems. First, it implies that schools are being rated based solely on the performance of low-achieving subgroups that need to catch up. But that’s not true. A few generally high-achieving subgroups are also included in the calculations, most notably Asian and white students. Second, given the component’s name, one would expect to see narrowing achievement gaps among districts receiving top marks and widening gaps among those receiving low marks. Yet as we’ll see, that is not necessarily the case.

To illustrate the latter point in more detail, let’s examine whether districts’ Gap Closing ratings line up with whether their gaps (relative to the state average) for economically disadvantaged (ED) and black students are actually closing. These subgroups are selected because gaps by income and race are prominent in education debates and are likely what comes to mind when people see the term Gap Closing. Of course, this isn’t a comprehensive analysis. Districts struggling to close one of these gaps may have more success with other subgroups. Nevertheless, it’s a reasonable test of whether the component is working as advertised.

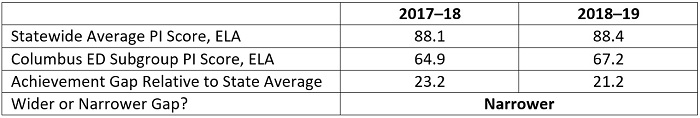

There are multiple ways to measure an “achievement gap.” For simplicity, I choose to measure each subgroup’s gap against the statewide average of all students. Table 1 illustrates the method, using the ELA performance index (PI) scores, a composite measure of achievement, for ED students attending the Columbus school district. In this case, the district is narrowing the achievement gap: It had a 21.2-point gap in 2018–19 versus a 23.2-point gap in the previous year.[1]

Table 1: Illustration of an achievement-gap closing calculation

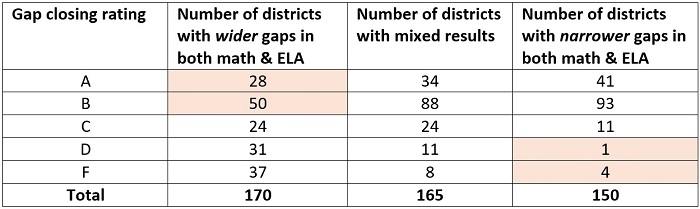
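The arithmetic behind this method can be sketched in a few lines of Python. This is only an illustration of the approach described above, not the state’s official calculation. The function names and the PI scores are hypothetical placeholders; they are chosen only so that the resulting gaps match the 23.2-point and 21.2-point figures cited for Columbus.

```python
def gap_to_state(state_avg: float, subgroup_score: float) -> float:
    """Gap relative to the statewide all-student average.

    A positive value means the subgroup scores below the state average.
    """
    return state_avg - subgroup_score


def gap_change(prior_gap: float, current_gap: float) -> float:
    """Year-over-year change in the gap; a negative value means the gap narrowed."""
    return current_gap - prior_gap


# Hypothetical PI scores (placeholders, not the actual Table 1 values).
prior_gap = gap_to_state(state_avg=80.0, subgroup_score=56.8)    # 2017-18
current_gap = gap_to_state(state_avg=81.0, subgroup_score=59.8)  # 2018-19
change = gap_change(prior_gap, current_gap)

print(f"Prior gap: {prior_gap:.1f}")
print(f"Current gap: {current_gap:.1f}")
print(f"Change: {change:+.1f} ({'narrowed' if change < 0 else 'not narrowed'})")
```

Note that under this definition a gap can narrow even when a subgroup’s score falls, as long as the state average falls by more.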

So are highly rated districts on Gap Closing actually narrowing their achievement gaps? And do poorly rated districts see wider gaps over time? The answer is “sometimes, but not always.” Consider the ED subgroup data shown in Table 2. Seventy-eight districts received A’s or B’s for Gap Closing even though their ED subgroup gaps widened in both math and ELA from 2017–18 to 2018–19. On the opposite end, five districts received D’s or F’s despite narrowing gaps for their ED students. The “mixed results” column shows districts that had a narrower gap in one subject but a wider gap in the other.

Table 2: Results for the ED subgroup

* 123 districts were excluded because they either enrolled too few ED students, or their ED subgroup score was higher than the state average in both 2017–18 and 2018–19 (i.e., there was no gap to evaluate in either year).

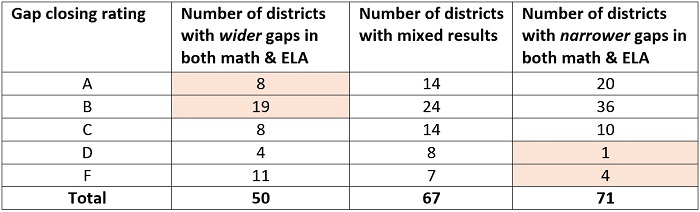
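For readers who want to replicate the sorting used in these tables, here is a minimal sketch of how a district could be placed into the “narrowed,” “widened,” or “mixed results” column. The function name and labels are hypothetical, and treating a subject with no change as “mixed” is my assumption; the tables do not specify how such ties are handled.

```python
def classify_district(math_gap_change: float, ela_gap_change: float) -> str:
    """Sort a district by the year-over-year change in its subgroup gaps.

    Each argument is (current-year gap) minus (prior-year gap), so a
    negative value means the gap narrowed in that subject.
    """
    if math_gap_change < 0 and ela_gap_change < 0:
        return "narrowed in both subjects"
    if math_gap_change > 0 and ela_gap_change > 0:
        return "widened in both subjects"
    # Assumption: anything else, including no change in a subject, is "mixed".
    return "mixed results"


# Hypothetical gap changes for three made-up districts.
print(classify_district(-2.0, -1.1))
print(classify_district(0.8, 1.5))
print(classify_district(-0.4, 0.9))
```

Cross-tabulating these labels against each district’s Gap Closing letter grade yields a table with the same layout as the ones shown here.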

Table 3 displays the results for the black subgroup. Again, we see cases in which the Gap Closing rating does not correspond to achievement gap data. Despite a widening gap for black students in both subjects, twenty-seven districts received A’s or B’s on this component. Conversely, five districts received D’s or F’s even though their gap narrowed compared to the prior year.

Table 3: Results for the black subgroup

* 420 districts were excluded because they either enrolled too few black students, or their black subgroup score was higher than the state average in both 2017–18 and 2018–19 (i.e., there was no gap to evaluate in either year).

* * *

These results should give us pause about whether Gap Closing is the right name. To address the disconnect, Ohio has three main options, two of which are ill-advised (options 1 and 2 below). The third option, simply changing the name, is the one that state policymakers should adopt.

Option 1: Punt. Policymakers could eliminate the component. But this would remove a critical accountability mechanism that encourages schools to pay attention to students who most need the extra help. It might also put Ohio out of compliance with federal education law on subgroups.

Option 2: Change the calculations. The disconnect in some districts’ results may reflect the reality that Gap Closing does not directly measure achievement gap closing or widening. Ohio could tie the calculations more closely to the name, but there are significant downsides to this alternative.

- First, the best approach to measuring an “achievement gap” is not clear. Should we measure the gap between subgroups in relation to the district or statewide average? Or should we compare them to the highest-achieving subgroup in a district—or the highest in the state?

- Second, schools may face a perverse incentive to ignore higher-achieving groups in an attempt to “lower the ceiling.” This would be especially problematic if local averages were used.

- Third, Ohio would need to figure out how to incorporate higher-achieving subgroups. When no gaps exist, how do we measure “gap closing”?

Option 3: Change the name. Instead of trying to untangle the complications of measuring gap closing, a more straightforward approach is to change the name of the component. We at Fordham have suggested the term “Equity,” which would better convey its purpose and design. The component’s performance index dimension encourages schools to help all subgroups meet steadily increasing state achievement goals. And though not discussed above, the value-added aspect looks at whether students across all subgroups make solid academic progress. In general, these calculations reflect whether students from all backgrounds are being equally well served by their schools. An “A” on Equity would let the public know that a district’s subgroups are meeting state academic goals, while an “F” would be a red flag that most or all of its subgroups are struggling academically.

Closing achievement gaps remains a central goal of education reform. Yet the Gap Closing language doesn’t quite work when it comes to a report card component name. To avoid misinterpretation, Ohio should change the terminology used to describe subgroup performance.